What are neural networks and how do they work?

The Mathematics Behind How Computers Recognise Things

How do we recognise things? The question may seem too obvious to be worth answering; after all, we can all tell a cat from a dog, a pencil from a pen, and a mountain from a building. Perhaps the more interesting question is what mechanisms allow us to recognise these objects and to tell them apart from objects that may look quite similar.

This is certainly not an article about the psychology of learning and development. Rather, it draws some basic parallels between how we learn and how we might teach machines, in this case fittingly named neural networks, to learn to recognise these objects on their own. Our brains contain an extensive library of images, from cats to dogs to pencils to pens. We may not remember the exact appearance of every single cat we have ever seen (it would be a rather bizarre phenomenon if we could), but we do retain the gist of what each cat looked like. This gist is likely some amalgamation of shapes, colours, shades and features that lets us distinguish a cat from other four-legged creatures, and our brains handle it subconsciously and instantaneously.

When we build a neural network, we use this same idea: the machine learns for itself from the data we give it, so the choice of data is very important. The data the machine learns from is known as training data. It contains both the input, such as an image of a cat, and the corresponding answer, ‘a cat’. If, for instance, we were trying to get our machine to recognise a range of different animals, we might input pictures of dogs, cats, elephants and frogs, each paired with the correct answer so that the machine can check whether it was right. Two other kinds of data are also used: validation data, which is given to the machine periodically to check that it is on the right track while it learns, and test data, which is held back until the end to measure the accuracy of the finished machine.
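As a minimal sketch of how labelled data might be divided into these three sets, the Python below shuffles a hypothetical dataset of (image, label) pairs and splits it 80/10/10 into training, validation and test data. The split proportions, the placeholder image names and the helper `split_dataset` are illustrative assumptions, not a fixed rule.

```python
import random

# Hypothetical labelled dataset: each example pairs an "image" with its answer.
# Placeholder strings stand in for real pixel matrices.
ANIMALS = ["dog", "cat", "elephant", "frog"]
dataset = [(f"image_{i}", ANIMALS[i % len(ANIMALS)]) for i in range(100)]

def split_dataset(data, train_pct=80, val_pct=10, seed=0):
    """Shuffle labelled examples, then split them into training, validation and test sets."""
    shuffled = list(data)                  # copy so the original order is untouched
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split repeatable
    n_train = len(shuffled) * train_pct // 100
    n_val = len(shuffled) * val_pct // 100
    train = shuffled[:n_train]                # used to adjust the network
    val = shuffled[n_train:n_train + n_val]   # checked periodically during learning
    test = shuffled[n_train + n_val:]         # held back for the final accuracy check
    return train, val, test

train, val, test = split_dataset(dataset)
print(len(train), len(val), len(test))  # 80 10 10
```

Shuffling first matters: if the examples were stored animal by animal, an unshuffled split could leave some animals out of the training data entirely.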

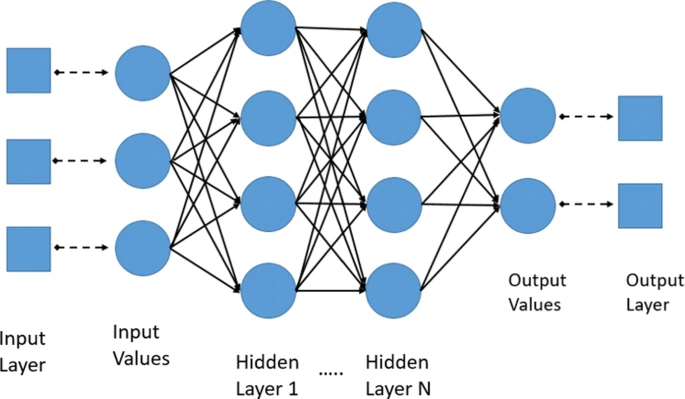

To build our neural network’s structure (known as its architecture), we borrow a concept from the brain itself. Human brains, like all brains, are made up of neurons: cells that pass information to one another as electrical signals. Each neuron connects to many others in a giant network of billions of neurons. The connections between neurons, called synapses, vary in strength, and it is this pattern of connections that allows a message arriving as input to be translated across the brain into the correct output. Neural networks are built in a loosely analogous way, except that the neurons are replaced by nodes (small units that each hold a number) and the synapses are replaced by weighted connections to other nodes. The nodes are arranged in layers, and information passes from the first layer to the last. The number of layers is known as the depth and the number of nodes in each layer is known as the width. Increasing the width and depth creates more pathways for information, which lets the network represent more complex patterns; however, a larger network also needs more data and computation to train, so it does not automatically give more accurate answers.
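To make width and depth concrete, here is a small sketch that counts the weights and biases in a network, assuming every node in one layer is connected to every node in the next (a "fully connected" network). The layer sizes (4,900 inputs for a 70 by 70 image, two hidden layers, 4 outputs) and the helper `count_parameters` are hypothetical choices for illustration.

```python
def count_parameters(widths):
    """Count weights and biases in a fully connected network.

    widths[i] is the number of nodes in layer i (input layer first).
    """
    weights = 0
    biases = 0
    # Every node in one layer links to every node in the next layer, so a pair of
    # neighbouring layers contributes n_in * n_out weights and one bias per
    # node in the receiving layer.
    for n_in, n_out in zip(widths, widths[1:]):
        weights += n_in * n_out
        biases += n_out
    return weights, biases

# Hypothetical architectures: 4,900 inputs (a 70 by 70 image), two hidden
# layers, and 4 outputs (dog, cat, elephant, frog).
narrow = [4900, 16, 16, 4]
wide = [4900, 32, 32, 4]

print(count_parameters(narrow))  # (78720, 36)
print(count_parameters(wide))    # (157952, 68)
```

Doubling the width of the hidden layers roughly doubles the number of values the network has to learn, which is one reason bigger networks need more data and computation.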

Now for what you have been eagerly waiting to learn: how the actual process of learning works. The first stage is known as forward propagation, whose name becomes far more apparent once we look at the mathematics; it is essentially the guessing step. The first problem for our neural network is how to receive an image at all. To solve this, the image must first be translated into a mathematical structure that the network can process, and for this we use a matrix. An image, such as that of a cat, is made up of many pixels arranged in a grid. We can take, for instance, a 70 by 70 pixel square image of a cat and translate each colour into a number, e.g. black as 0, grey as 1 and white as 2. This gives a 70 by 70 matrix, which we can unroll into a 4,900 by 1 matrix (a single column); it is a lower-resolution, three-shade version of the original image. Choosing a higher resolution, such as 700 by 700, and a larger range of colours gives the neural network more information and, if the network is large enough to handle it, can greatly improve the accuracy of the final result. Using a 4,900 by 1 matrix for each image also makes the approach scalable: 100,000 images can be processed together by placing their columns side by side in a single 4,900 by 100,000 matrix.
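The sketch below shows this image-to-matrix step in plain Python, assuming a 70 by 70 image whose pixels have already been reduced to the three shades 0, 1 and 2. Random values stand in for a real photo, and `random_image` and `flatten` are hypothetical helper names. Each image becomes a column of 4,900 numbers, and placing the columns side by side gives the batch matrix.

```python
import random

SIZE = 70    # the 70 by 70 example image
SHADES = 3   # 0 = black, 1 = grey, 2 = white

def random_image(rng):
    """Hypothetical stand-in for a real photo: a SIZE x SIZE grid of shade values."""
    return [[rng.randrange(SHADES) for _ in range(SIZE)] for _ in range(SIZE)]

def flatten(image):
    """Unroll the grid row by row into one column of SIZE * SIZE numbers."""
    return [pixel for row in image for pixel in row]

rng = random.Random(0)
images = [random_image(rng) for _ in range(5)]
columns = [flatten(img) for img in images]  # one 4,900 by 1 column per image

# Put the columns side by side: row r of the batch holds pixel r of every image,
# giving a 4,900 by (number of images) matrix.
batch = [list(row) for row in zip(*columns)]

print(len(columns[0]))            # 4900
print(len(batch), len(batch[0]))  # 4900 5
```

The same code would handle 100,000 images by generating more columns; in practice a numerical library would store the batch far more efficiently than nested Python lists.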

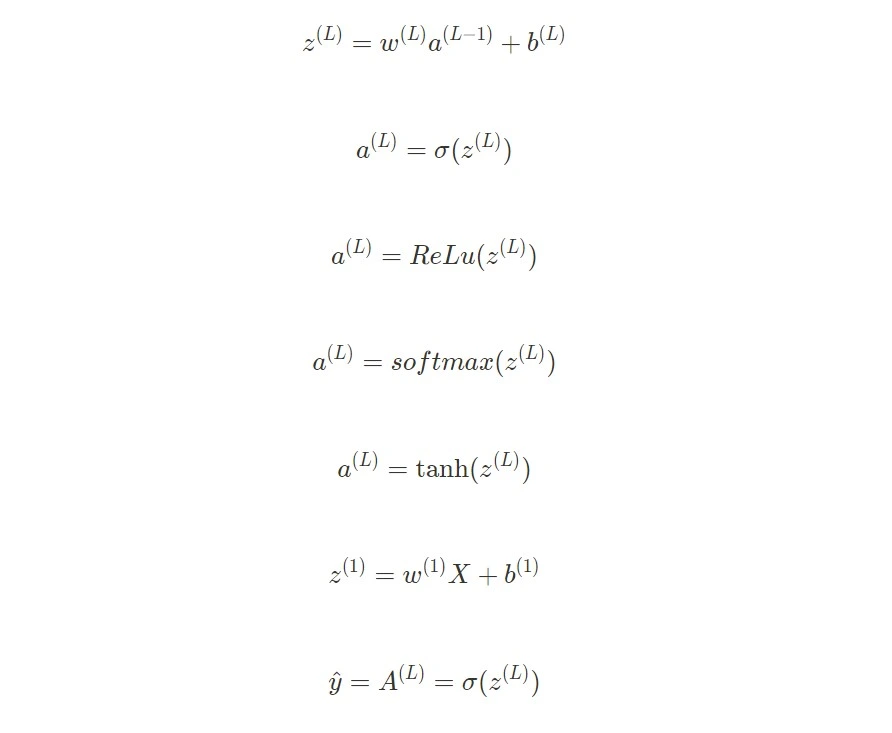

Once we have chosen the image, we can let our machine attempt to guess what it shows. At first, the machine’s accuracy will be very low, because it has never seen a cat before and so has no idea what a cat should look like. To turn our input (the image) into our output (the animal in the image), we apply some mathematical operations to it, the simplest being addition and multiplication, known respectively as the bias and the weight. A bias simply shifts a value by adding to it, whereas a weight scales it by multiplication; the weights give a far more flexible system in which information can be transformed in, for lack of a better word, a more organic way. For data to travel between nodes, a weight (W) and a bias (b) are applied, which produces an inactivated value (Z). This value must then be activated before reaching the next node, which means passing it through an activation function. If you consider the steps we are taking, repeated addition and multiplication could quickly make our values very large or very small and drive them far apart, so an activation function such as sigmoid is used to squeeze the entire number line into values between 0 and 1 (tanh, another common choice, squeezes it into values between -1 and 1). With data between these bounds, our machine can make sense of the values far more easily. Activation functions also make the network non-linear; without them, stacking extra layers would be no more powerful than using a single layer. You may think these steps are unnecessarily tedious, but when you consider that we may be working in hundreds of thousands of dimensions, applying one simple function to each value is a cheap way to keep everything in range. Before we first enter data into the network, we assign random values to all the weights and biases; these will change later, in the learning step. If the network is built correctly, the data travels through all the layers before reaching the output layer. The output layer, like all the others, is also a matrix.
However, this matrix usually has a single column containing a probability for each of the possible outputs we would like the machine to recognise, for instance the probability that the image is a dog, a cat, an elephant or a frog. Provided the output layer uses an activation function designed for this, such as softmax, these 4 probabilities sum to one, because we have defined them as the only possible answers within our network. If someone were to input a picture of a rabbit, the machine would still attempt to assign one of the listed animals to it, even though that answer would be wrong.
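Here is a minimal sketch of forward propagation, assuming a network with one hidden layer of 16 nodes using sigmoid and an output layer of 4 nodes using softmax, so that the outputs sum to one. The layer sizes, the range of the random starting values and the helper names (`random_layer`, `layer_forward`, `forward`) are assumptions made for illustration; real libraries do the same arithmetic with fast matrix routines.

```python
import math
import random

ANIMALS = ["dog", "cat", "elephant", "frog"]

def sigmoid(z):
    """Squeeze any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(values):
    """Turn the output layer into probabilities that sum to one."""
    m = max(values)                          # subtract the max to avoid overflow
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def random_layer(n_in, n_out, rng):
    """Random starting weights (n_out rows of n_in) and one bias per node."""
    W = [[rng.uniform(-0.05, 0.05) for _ in range(n_in)] for _ in range(n_out)]
    b = [rng.uniform(-0.05, 0.05) for _ in range(n_out)]
    return W, b

def layer_forward(W, b, A):
    """Inactivated values Z = W A + b, one per node in the next layer."""
    return [sum(w * a for w, a in zip(row, A)) + bias for row, bias in zip(W, b)]

def forward(layers, x):
    A = x
    for i, (W, b) in enumerate(layers):
        Z = layer_forward(W, b, A)
        if i < len(layers) - 1:
            A = [sigmoid(z) for z in Z]  # hidden layer: activate each value
        else:
            A = softmax(Z)               # output layer: probabilities
    return A

rng = random.Random(42)
sizes = [4900, 16, 4]
layers = [random_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
image = [rng.randrange(3) for _ in range(4900)]  # hypothetical flattened 70 by 70 image

probs = forward(layers, image)
for animal, p in zip(ANIMALS, probs):
    print(f"{animal}: {p:.3f}")
print(sum(probs))  # very close to 1
```

Because the weights are random, the four probabilities are essentially meaningless guesses at this stage, which is exactly the low starting accuracy described above.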

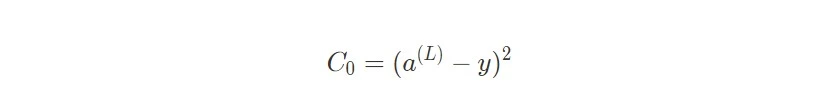

Our machine has now attempted to guess what the image shows. The next steps are to tell the machine the correct answer, compare its output against the expected output, and make the changes to the network needed to make its next guesses more accurate (i.e. the learning step). This stage is built around a process known as backpropagation. The first step is creating the correct answer, which simply involves creating a matrix with the same dimensions as the output, in which the correct answer is given a 1 and all the others are given 0s. If you reflect on what this shows, it is essentially saying that the machine should have said there was a 100% chance that the image was a cat and a 0% chance that it was a dog, an elephant or a frog. The next step is comparing the machine’s results with these expected values. Mathematically speaking, we are trying to find the distance between the output values and the expected values. To do this, we take the difference between each pair, square it to make every value positive, and add the results together, giving us the cost function. The cost measures error rather than accuracy: the lower the cost, the more accurate the guess.
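The comparison step can be sketched in a few lines, assuming the four animals are listed in the order dog, cat, elephant, frog. The two guesses are made-up numbers, and `one_hot` and `squared_error_cost` are hypothetical names. (Some texts average the squared differences instead of summing them; the idea is the same.)

```python
ANIMALS = ["dog", "cat", "elephant", "frog"]

def one_hot(label):
    """The 'correct answer' matrix: 1 for the right animal, 0 for all the others."""
    return [1.0 if animal == label else 0.0 for animal in ANIMALS]

def squared_error_cost(output, expected):
    """Square each difference so it is positive, then add them up."""
    return sum((o - e) ** 2 for o, e in zip(output, expected))

target = one_hot("cat")            # [0.0, 1.0, 0.0, 0.0]
good_guess = [0.1, 0.7, 0.1, 0.1]  # hypothetical output leaning towards "cat"
poor_guess = [0.4, 0.2, 0.2, 0.2]  # hypothetical output leaning towards "dog"

print(squared_error_cost(good_guess, target))  # about 0.12
print(squared_error_cost(poor_guess, target))  # about 0.88
```

The better guess has the lower cost, which is why learning means pushing the cost down.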

The final step is allowing our machine to learn. This step is both the most crucial and the hardest to grasp intuitively, so I will try to explain it both simply and accurately. If we look at the values we have to hand, we have the biases on all the nodes and the weights on all the links between them, and we have the cost for each image. We will not try to change the activation functions, because they are simply there for our convenience and are not meant to take part in the recognition itself. Instead, we want to assess how small changes in the weights and biases affect the cost, and to find the values of the weights and biases that give the lowest cost (and therefore the most accurate outputs). Refining these values so that they give the most accurate outputs across all of the thousands of images fed into the network is the key aim. That way, when we feed new data into the network, it will flow through the layers, via all the weights and biases, in a way that gives the best possible answer. The tool calculus offers for this is partial differentiation, which looks at how small nudges in multiple dimensions affect a result. The following image shows, in 3 dimensions, how changing where you are on the ‘surface’ of a function changes the height of the point, across all of its troughs and peaks. Here we are looking for the lowest possible height (at the bottom of these divots), which corresponds to the smallest distance between the outputs and the actual answers (and thus the highest accuracy). It is important to note that this image is only a model in 3 dimensions, whereas our neural network uses partial differentiation in thousands of dimensions, which is impossible even to attempt to visualise.

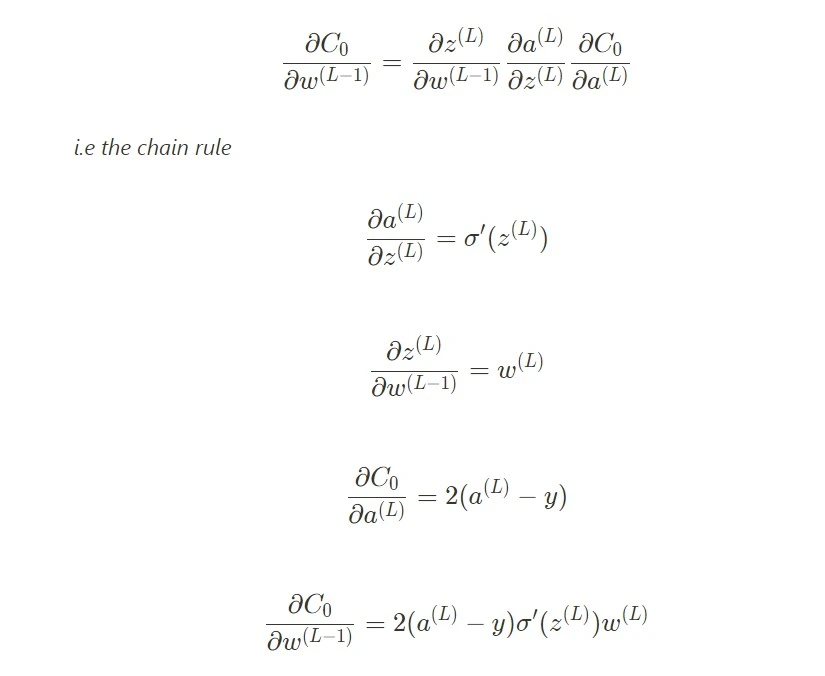
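The search for the bottom of a divot can be illustrated on a simple 3D surface with the standard technique, usually called gradient descent: estimate the partial derivatives, then take a small step downhill, and repeat. This is a sketch under stated assumptions: the bowl-shaped function `height` is made up, and the partial derivatives are estimated by literally nudging x and y a tiny amount, mirroring the description above. Real networks instead compute their partial derivatives exactly with backpropagation, because nudging each of thousands of weights separately would be far too slow.

```python
def height(x, y):
    """Hypothetical bowl-shaped surface whose lowest point is at (1, -2)."""
    return (x - 1) ** 2 + (y + 2) ** 2

def partial_derivatives(f, x, y, h=1e-6):
    """Estimate df/dx and df/dy by nudging one coordinate at a time."""
    df_dx = (f(x + h, y) - f(x - h, y)) / (2 * h)
    df_dy = (f(x, y + h) - f(x, y - h)) / (2 * h)
    return df_dx, df_dy

def descend(f, x, y, learning_rate=0.1, steps=200):
    """Repeatedly take a small step downhill, i.e. against the partial derivatives."""
    for _ in range(steps):
        df_dx, df_dy = partial_derivatives(f, x, y)
        x -= learning_rate * df_dx
        y -= learning_rate * df_dy
    return x, y

start = (4.0, 3.0)
x, y = descend(height, *start)
print(round(x, 3), round(y, 3))  # 1.0 -2.0
```

In a neural network, `height` would be the cost, and x and y would be replaced by thousands of weights and biases. On a bumpier surface than this bowl, the same procedure may settle at the bottom of a nearby divot rather than the lowest one overall.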

Instead of attempting to visualise such a phenomenon, we use mathematical notation, which may seem daunting at first, but the more dimensions we are dealing with, the more suitable it becomes. To keep things simple, we will concentrate on the weights (W) for now rather than the biases (b), and ask how each weight affects the cost function (C). What we are assessing is how a small nudge to a weight (∂W) results in a small nudge to the cost (∂C), i.e. the ratio ∂C/∂W. If this ratio is negative, increasing the weight decreases the distance between the output and the answer, so it increases the accuracy; if it is positive, we should decrease the weight instead. Nudging every weight a little in whichever direction lowers the cost, and repeating this many times, is how the network learns. Various tricks from calculus help here, such as the chain rule, which lets us calculate ∂C/∂W by multiplying together the nudges that follow on from a nudge to the weight. When the weight is nudged (∂W), the inactivated value is nudged (∂Z), which nudges the activated value (∂A), which in turn nudges the cost (∂C); the chain rule gives ∂C/∂W = (∂Z/∂W)(∂A/∂Z)(∂C/∂A).
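To see the chain rule at work, the sketch below follows a single weight through one hypothetical node: Z = W·a + b, A = sigmoid(Z) and C = (A − y)², where a is the value arriving from the previous node and y is the correct answer. Multiplying ∂Z/∂W = a, ∂A/∂Z = A(1 − A) and ∂C/∂A = 2(A − y) gives ∂C/∂W, which the code checks against a direct nudge to the weight and then uses to update the weight repeatedly. All the numbers, including the learning rate, are assumptions chosen for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def cost(W, b, a, y):
    """Cost of one hypothetical node: squared distance between its activated output and y."""
    A = sigmoid(W * a + b)
    return (A - y) ** 2

def dC_dW(W, b, a, y):
    """Chain rule: dC/dW = dZ/dW * dA/dZ * dC/dA."""
    Z = W * a + b          # inactivated value
    A = sigmoid(Z)         # activated value
    dZ_dW = a              # nudging W by 1 nudges Z by a
    dA_dZ = A * (1 - A)    # slope of the sigmoid at Z
    dC_dA = 2 * (A - y)    # slope of the squared distance
    return dZ_dW * dA_dZ * dC_dA

# Hypothetical values: incoming activation a, bias b, correct answer y = 1.
W, b, a, y = 0.5, 0.1, 0.8, 1.0

# Check the chain rule against a direct nudge to the weight.
h = 1e-6
nudged = (cost(W + h, b, a, y) - cost(W - h, b, a, y)) / (2 * h)
print(dC_dW(W, b, a, y), nudged)  # both negative and nearly equal

# A negative dC/dW means increasing W lowers the cost, so step against the slope.
learning_rate = 1.0
start_cost = cost(W, b, a, y)
for _ in range(100):
    W -= learning_rate * dC_dW(W, b, a, y)
print(start_cost, cost(W, b, a, y))  # the cost has gone down
```

Backpropagation applies this same multiplication of nudges layer by layer, reusing the intermediate results so that every weight’s ∂C/∂W can be found in one backward pass.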

I hope the maths is not all lost on you already, but if it is, don’t worry: the main thing to take away is the basic premise of how we can take a fairly abstract concept such as learning and use some very clever mathematics to create a real-life version of it. As you may already know, neural networks are widely used, from facial recognition to spotting brands in social media pictures for marketing. Some have even used neural networks to learn patterns in stock prices in order to estimate the likely outcome of each trade. The possibilities are truly endless!